The mortgage and real estate infrastructure crisis that tokenization can actually fix

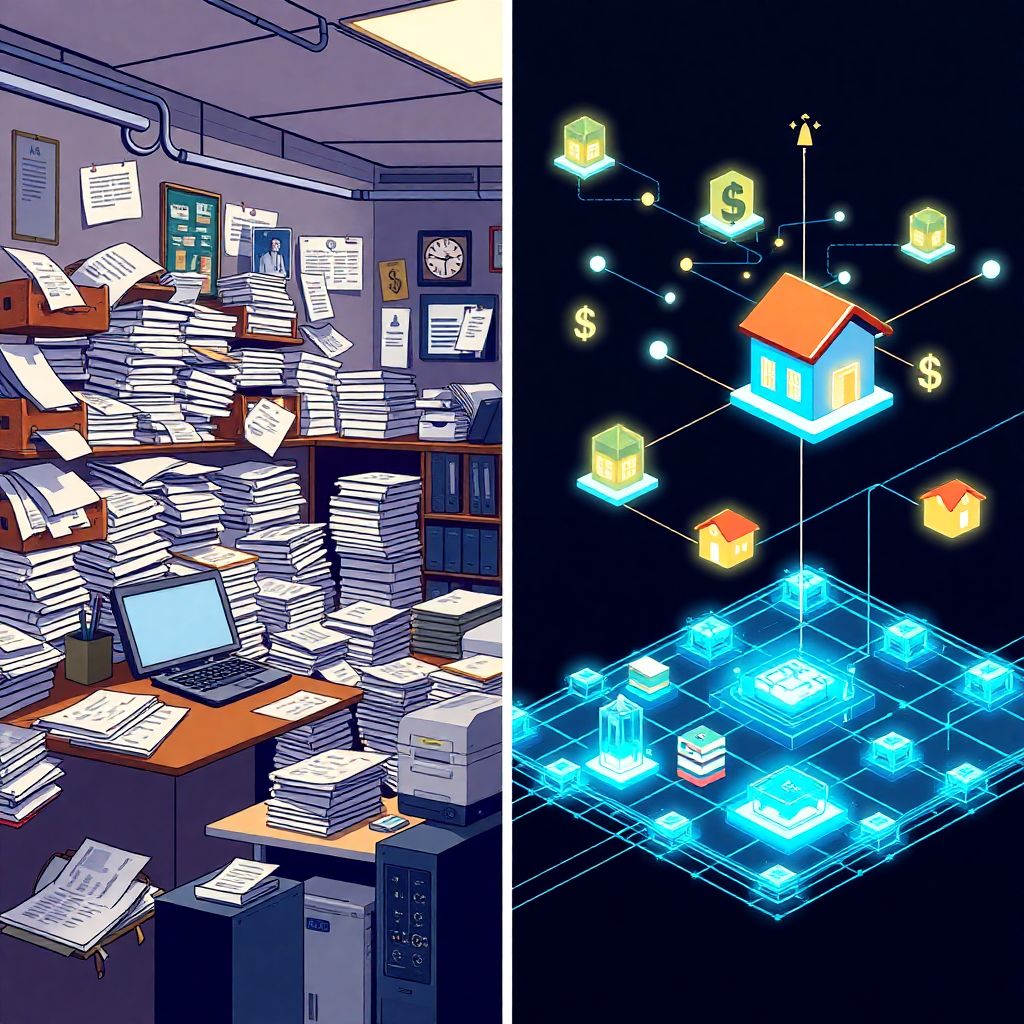

Mortgage and real estate finance sit at the core of the global economy, yet the rails that support this market look more like a patchwork of 20th‑century workflows than the backbone of a multitrillion‑dollar asset class.

Take Canada as one example: outstanding residential mortgage credit has surpassed $2.6 trillion, with more than $600 billion in new mortgages originated every year. A market of that scale should operate on infrastructure capable of real‑time verification, secure and standardized data sharing, and rapid movement of capital. Instead, the system still runs on PDFs, email attachments, legacy forms, and siloed databases.

That mismatch is not a minor inconvenience. It is a structural weakness that amplifies risk, inflates costs for borrowers and lenders, and constrains how capital can flow into and through housing finance.

Tokenization — the representation of financial rights and data as programmable digital units — is often described in speculative or buzzword‑heavy terms. In mortgage and real estate, however, it is less about marketing and more about plumbing: transforming fragmented, document‑centric workflows into a unified, auditable, data‑centric infrastructure.

—

A data problem disguised as a process problem

The biggest obstacle in mortgage and real estate finance is not a lack of capital or insufficient borrower demand. It is disjointed, poorly structured data.

Industry analyses consistently show that a large share of origination and servicing costs comes from humans doing what machines should be doing: reconciling data, correcting inconsistencies, retyping the same information into different systems, and chasing missing documents.

A study by LoanLogics found that roughly 11.5% of mortgage loan data is either incomplete or inaccurate. That might sound small, but at market scale it translates into repeated verification cycles, manual exception handling, and downstream corrections, adding up to an estimated $7.8 billion in extra consumer costs over ten years.

Information does not move as structured, reusable data. It moves as artifacts: PDFs, scans, spreadsheets, email threads, portal uploads. At each stage — broker, lender, underwriter, insurer, servicer, investor, regulator — data is re‑entered, re‑interpreted, and re‑checked. There is no universal system of record for the life of a loan, only scattered snapshots.

This fragmentation makes inefficiency unavoidable:

– Verification takes days or weeks.

– Errors are common and compound over time.

– Historical data is hard to query or analyze.

– Even large institutions struggle to reconstruct complete, machine‑readable loan histories.

The industry has not truly digitized mortgages. It has simply converted paper processes into electronic form without changing the underlying model. Tokenization targets precisely this failure by shifting the foundational unit of record from documents to structured, verifiable data.

—

What tokenization actually changes

Tokenization is not just about putting assets “on a blockchain.” It is about how key information is represented, secured, governed, and shared. In a tokenized mortgage or property record, the critical attributes of the asset and the loan are captured as structured, interoperable data objects, not as static files.

Regulators around the world are tightening expectations around data quality, traceability, and audit trails. They increasingly demand not just access to information, but clear evidence of its origin, integrity, and any changes made over time. Legacy document‑based systems were never designed to provide this level of assurance at scale.

Tokenization addresses this by:

– Converting loan and asset information into consistent, machine‑readable records.

– Recording those records on a shared, tamper‑evident ledger.

– Allowing each stakeholder to reference the same canonical data, instead of maintaining separate, inconsistent copies.

Each key data point — borrower income, employment history, credit attributes, collateral details, property encumbrances, loan terms, payment history — can be validated once, cryptographically anchored, and then reused across the ecosystem without manual re‑entry.
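To make "validated once, cryptographically anchored, and then reused" concrete, here is a minimal Python sketch, assuming a loan record is serialized to canonical JSON and fingerprinted with SHA-256. The `LoanRecord` fields and the `fingerprint`/`verify` helpers are hypothetical names for illustration, and the ledger itself is out of scope: the sketch only shows how a stored hash lets any counterparty detect that a record has changed.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class LoanRecord:
    # Hypothetical field set for illustration; not an industry-standard schema.
    loan_id: str
    borrower_income: int   # annual, whole currency units
    property_value: int
    principal: int
    rate_bps: int          # interest rate in basis points (5.29% -> 529)
    term_months: int


def fingerprint(record: LoanRecord) -> str:
    """SHA-256 over a canonical JSON encoding (sorted keys, no extra whitespace)."""
    canonical = json.dumps(asdict(record), sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


def verify(record: LoanRecord, anchored_hash: str) -> bool:
    """Any counterparty can recompute the hash and compare it with the anchored value."""
    return fingerprint(record) == anchored_hash


# Validate once, then anchor the fingerprint (assumed to be written to a shared ledger).
original = LoanRecord("L-001", 120_000, 650_000, 520_000, 529, 300)
anchor = fingerprint(original)

print(verify(original, anchor))                                    # True
print(verify(replace(original, borrower_income=150_000), anchor))  # False
```

Integers (whole units, basis points) are used instead of floats so that every party produces byte-identical JSON, and therefore the same hash, for the same record.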

Security is not an add‑on in this model; it is built into the data layer:

– Cryptographic hashing ensures that if any record is altered, the change is detectable.

– Immutable logs create an end‑to‑end audit trail of who did what, and when.

– Smart permissions allow granular control over which participants can see which data, for what purpose, and for how long.
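The "immutable logs" bullet can be illustrated with a hash chain: each entry stores the hash of the previous one, so editing any past entry breaks verification. This is a minimal Python sketch, assuming JSON-serializable payloads; `AuditLog`, the actor names, and the event types are hypothetical, and a real deployment would replicate the log across participants rather than keep it in one process.

```python
import hashlib
import json
from dataclasses import dataclass, field
from datetime import datetime, timezone

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(body: dict) -> str:
    canonical = json.dumps(body, sort_keys=True, separators=(",", ":"))
    return hashlib.sha256(canonical.encode("utf-8")).hexdigest()


@dataclass
class AuditLog:
    """Append-only, hash-chained event log (an in-memory stand-in for a shared ledger)."""
    entries: list = field(default_factory=list)

    def append(self, actor: str, action: str, payload: dict) -> dict:
        body = {
            "actor": actor,                 # who
            "action": action,               # did what
            "payload": payload,
            "timestamp": datetime.now(timezone.utc).isoformat(),  # and when
            "prev_hash": self.entries[-1]["hash"] if self.entries else GENESIS,
        }
        body["hash"] = _digest(body)        # hash covers every field above
        self.entries.append(body)
        return body

    def verify(self) -> bool:
        """Recompute every hash and check that each entry links to its predecessor."""
        prev_hash = GENESIS
        for entry in self.entries:
            body = {k: v for k, v in entry.items() if k != "hash"}
            if entry["prev_hash"] != prev_hash or _digest(body) != entry["hash"]:
                return False
            prev_hash = entry["hash"]
        return True


log = AuditLog()
log.append("lender:acme", "income_verified", {"loan_id": "L-001"})
log.append("servicer:beta", "payment_posted", {"loan_id": "L-001", "amount_cents": 310_000})
print(log.verify())  # True

log.entries[0]["payload"]["loan_id"] = "L-999"  # a retroactive, unrecorded edit
print(log.verify())  # False
```

On its own, a chain held by one party is only tamper-evident if others hold a copy of (or an anchor for) the latest hash; otherwise the holder could recompute every hash after an edit. That replication is what the shared ledger provides.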

Instead of repeatedly uploading sensitive documents into multiple portals, counterparties can query or reference the same underlying tokenized record with controlled, role‑based access.
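The field-level, purpose-bound, time-limited access described above can be sketched as a filter applied to a shared record. In practice such checks would be enforced by the ledger or an access-control service; here everything runs in memory, and `Grant`, `view`, the roles, and the purposes are hypothetical names chosen for illustration.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass(frozen=True)
class Grant:
    # Hypothetical access grant: who may see which fields, why, and until when.
    role: str
    fields: frozenset
    purpose: str
    expires_at: datetime


def view(record: dict, grants: list, role: str, purpose: str, now: datetime) -> dict:
    """Return only the fields an unexpired grant allows this role to see for this purpose."""
    allowed = set()
    for g in grants:
        if g.role == role and g.purpose == purpose and now < g.expires_at:
            allowed |= g.fields
    return {k: v for k, v in record.items() if k in allowed}


now = datetime(2025, 1, 15, tzinfo=timezone.utc)
record = {
    "loan_id": "L-001",
    "borrower_name": "A. Borrower",
    "borrower_income": 120_000,
    "property_value": 650_000,
    "principal": 520_000,
}
grants = [
    Grant("insurer", frozenset({"loan_id", "property_value", "principal"}),
          "mortgage_insurance", now + timedelta(days=30)),
    Grant("investor", frozenset({"loan_id", "principal"}),
          "due_diligence", now - timedelta(days=1)),  # already expired
]

print(view(record, grants, "insurer", "mortgage_insurance", now))
# {'loan_id': 'L-001', 'property_value': 650000, 'principal': 520000}
print(view(record, grants, "investor", "due_diligence", now))  # {}
```

Access defaults to nothing: a field is visible only if some live grant names the role, the purpose, and that field.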

—

From “secure documents” to secure infrastructure

Today, security and transparency are usually bolted on top of old workflows. Institutions encrypt emails, password‑protect documents, or add multi‑factor authentication to portals — all important steps, but none of them fix the fact that the underlying records are still fragmented, inconsistently formatted, and difficult to audit.

Tokenization reverses this logic. Rather than securing the edges of legacy systems, it secures the core representation of the asset and its data. The loan, the property rights, the payment streams, and the servicing obligations can all be modeled as interlinked digital tokens or data objects on shared infrastructure.

This has several implications:

– Auditability by design: When every update is recorded on a tamper‑evident ledger, regulators, investors, and internal risk teams can reconstruct the life of a loan or asset without combing through dozens of systems.

– Consistency across participants: All authorized parties are aligned to the same source of truth, drastically reducing reconciliation work.

– Policy as code: Compliance rules, eligibility conditions, and approval workflows can increasingly be embedded into the data layer itself (for example, through smart contracts), limiting room for error and manual misinterpretation.

In practice, this means that “what happened” to a mortgage or property over its life cycle is not pieced together from scattered files; it is visible as a continuous, verifiable history.
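The "policy as code" point can be made concrete with a small rule set evaluated against a structured loan record. This is plain Python, not a smart contract, and the rule names and thresholds (80% loan-to-value, a 360-month term, a verified-income flag) are illustrative assumptions rather than any lender's or regulator's actual requirements.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class Rule:
    name: str
    check: Callable[[dict], bool]


# Illustrative thresholds only; real limits come from lender policy and regulation.
RULES = [
    # LTV <= 80%, computed with integers to avoid floating-point edge cases.
    Rule("ltv_at_most_80pct", lambda loan: loan["principal"] * 100 <= loan["property_value"] * 80),
    Rule("term_at_most_360_months", lambda loan: loan["term_months"] <= 360),
    Rule("income_verified", lambda loan: loan.get("income_verified") is True),
]


def evaluate(loan: dict, rules: list = RULES) -> list:
    """Return the names of failed rules; an empty list means the loan passes."""
    return [r.name for r in rules if not r.check(loan)]


loan = {"principal": 520_000, "property_value": 650_000, "term_months": 300, "income_verified": True}
print(evaluate(loan))  # []  (LTV is exactly 80%)
print(evaluate(dict(loan, principal=560_000, income_verified=False)))
# ['ltv_at_most_80pct', 'income_verified']
```

Because each rule has a name, a failed check can be recorded in the audit trail together with the exact rule that triggered it.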

—

Liquidity in an inherently illiquid asset class

Real estate is among the most illiquid mainstream asset classes. Even when underlying properties are attractive and borrowers are performing, the mechanisms for moving, slicing, and reallocating capital remain cumbersome.

Mortgages are often locked into rigid structures, and secondary markets depend on heavy documentation, manual due diligence, and batch‑based settlement. This slows down capital rotation and creates significant barriers to entry for smaller investors and new funding models.

Tokenization opens a path to change this without undermining regulatory protections:

– Standardized, machine‑readable loan profiles reduce the friction of pooling, pricing, and trading mortgage‑backed assets.

– Programmable cash‑flow tokens can represent specific payment streams or risk tranches, facilitating more granular risk transfer.

– Faster, more transparent settlement becomes possible when transaction logic is encoded on a shared ledger rather than executed through a series of disconnected middle‑ and back‑office processes.
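Programmable cash-flow tokens are easiest to picture as a rule that splits each month's collections among tranches. This is a minimal sketch, assuming a two-tranche pool and a simplified sequential-pay rule; `Tranche`, `distribute`, and the balances and coupons are hypothetical, and real mortgage-backed structures add servicing fees, triggers, and loss allocation that are omitted here.

```python
from dataclasses import dataclass


@dataclass
class Tranche:
    name: str
    balance: int   # outstanding principal, in cents
    rate_bps: int  # annual coupon, in basis points


def distribute(collections: int, tranches: list) -> dict:
    """Simplified sequential-pay waterfall (amounts in cents).

    Tranches are listed from most senior to most junior. Interest is paid in
    priority order first; whatever remains retires principal, senior first.
    Unpaid interest is not carried forward in this sketch.
    """
    paid = {t.name: 0 for t in tranches}
    remaining = collections
    for t in tranches:  # 1) one month of interest, senior first
        interest_due = t.balance * t.rate_bps // (10_000 * 12)
        pay = min(remaining, interest_due)
        paid[t.name] += pay
        remaining -= pay
    for t in tranches:  # 2) principal, senior first
        pay = min(remaining, t.balance)
        t.balance -= pay
        paid[t.name] += pay
        remaining -= pay
    paid["residual"] = remaining
    return paid


senior = Tranche("A", 80_000_000, 450)  # $800,000 at 4.50%
junior = Tranche("B", 20_000_000, 900)  # $200,000 at 9.00%
print(distribute(1_000_000, [senior, junior]))  # $10,000 collected this month
# {'A': 850000, 'B': 150000, 'residual': 0}
print(senior.balance)  # 79450000
```

The junior tranche is paid last and absorbs any shortfall first, which is what lets each tranche carry a different, explicitly encoded risk profile.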

By improving data quality and traceability, tokenization makes due diligence more efficient. Investors can query the underlying attributes of loans or portfolios directly, rather than relying solely on static reports and trust in intermediaries.
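As a minimal sketch of that kind of direct query, assume an investor has permissioned read access to loan-level records. The `Loan` fields, the 80% loan-to-value and 30-day delinquency cut-offs, and the sample pool are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Loan:
    # Hypothetical loan-level attributes an investor might be permitted to query.
    loan_id: str
    balance: int          # outstanding principal, whole currency units
    property_value: int
    rate_bps: int
    days_past_due: int


def pool_summary(loans: list) -> dict:
    """Balance-weighted statistics computed directly from loan-level records."""
    total = sum(loan.balance for loan in loans)
    wac_bps = sum(loan.balance * loan.rate_bps for loan in loans) / total
    high_ltv = sum(loan.balance for loan in loans
                   if loan.balance * 100 > loan.property_value * 80)
    dq30 = sum(loan.balance for loan in loans if loan.days_past_due >= 30)
    return {
        "balance": total,
        "wac_pct": wac_bps / 100,            # weighted-average coupon
        "high_ltv_share": high_ltv / total,  # share of balance above 80% LTV
        "dq30_share": dq30 / total,          # share of balance 30+ days past due
    }


pool = [
    Loan("L-001", 400_000, 500_000, 500, 0),   # LTV 80%
    Loan("L-002", 300_000, 330_000, 550, 45),  # LTV ~91%, 45 days late
    Loan("L-003", 300_000, 600_000, 450, 0),   # LTV 50%
]
print(pool_summary(pool))
# {'balance': 1000000, 'wac_pct': 5.0, 'high_ltv_share': 0.3, 'dq30_share': 0.3}
```

The point is not the statistics themselves but that they are recomputed from the same records the lender and servicer use, rather than copied from a periodic report.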

Importantly, this is not about turning homes into speculative trading chips. It is about enabling capital to move more quickly and intelligently toward productive uses in housing finance — while maintaining, and in some cases improving, oversight and consumer protection.

—

Incremental digitization vs. infrastructure‑level change

Over the past decade, the mortgage industry has invested heavily in “digitization.” Borrowers can now apply online, upload documents via portals, and e‑sign some forms. On the surface, the experience has improved.

But beneath the interface, very little has changed. The same information still flows into siloed systems; the same manual checks occur in the background; the same batch processes run overnight. The result is a smoother front end sitting on top of the same brittle foundation.

Tokenization is different in kind, not just degree. It proposes an infrastructure‑level transformation:

– From documents to data objects.

– From local records to shared, synchronized ledgers.

– From after‑the‑fact audits to real‑time, verifiable histories.

That shift will not happen overnight. It is likely to unfold in phases:

1. Data standardization and mapping: Defining common schemas for key mortgage and property data elements.

2. Hybrid models: Running tokenized records in parallel with existing systems, using them first for internal reconciliation and risk management.

3. Regulated tokenized products: Introducing carefully structured tokenized mortgage pools or real estate funds within existing regulatory frameworks.

4. Gradual migration of core processes: Moving origination, servicing, and secondary market activity onto shared, token‑native infrastructure as confidence and regulatory clarity grow.
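Phase 1 is the least glamorous and most concrete of these steps. Here is a minimal sketch that maps exports from two hypothetical lenders, which name and format the same facts differently, onto one common schema. The schema, source field names, and date formats are assumptions for illustration; a real effort would map to an agreed industry standard rather than an ad hoc dictionary.

```python
from datetime import date, datetime
from decimal import Decimal

# Hypothetical common schema: canonical field -> expected type.
SCHEMA = {"loan_id": str, "principal_cents": int, "rate_bps": int, "origination_date": date}


def money_to_cents(text: str) -> int:
    # Decimal avoids float rounding; any sub-cent remainder is truncated.
    return int(Decimal(text.replace(",", "")) * 100)


def pct_to_bps(text: str) -> int:
    return int(Decimal(text) * 100)


# Hypothetical source systems: canonical field -> (source field, converter).
MAPPINGS = {
    "lender_a": {
        "loan_id": ("LoanNumber", str),
        "principal_cents": ("LoanAmt", money_to_cents),
        "rate_bps": ("NoteRate", pct_to_bps),
        "origination_date": ("OrigDate", lambda s: datetime.strptime(s, "%m/%d/%Y").date()),
    },
    "lender_b": {
        "loan_id": ("loan_ref", str),
        "principal_cents": ("principal", money_to_cents),
        "rate_bps": ("rate_pct", pct_to_bps),
        "origination_date": ("funded_on", date.fromisoformat),
    },
}


def to_canonical(source: str, row: dict) -> dict:
    """Map one source row onto the common schema, failing loudly on gaps or type errors."""
    out = {}
    for field, (src_field, convert) in MAPPINGS[source].items():
        raw = row.get(src_field)
        if raw in (None, ""):
            raise ValueError(f"{source}: missing {src_field!r} for {field!r}")
        value = convert(raw)
        if not isinstance(value, SCHEMA[field]):
            raise TypeError(f"{source}: {field!r} has type {type(value).__name__}")
        out[field] = value
    return out


a = to_canonical("lender_a", {"LoanNumber": "A-17", "LoanAmt": "520,000.00",
                              "NoteRate": "5.29", "OrigDate": "03/01/2024"})
b = to_canonical("lender_b", {"loan_ref": "B-9", "principal": "520000",
                              "rate_pct": "5.29", "funded_on": "2024-03-01"})
print(a["principal_cents"] == b["principal_cents"], a["origination_date"] == b["origination_date"])
# True True
```

Rejecting a missing or mistyped field is deliberate: it surfaces incomplete or inaccurate data at the point of entry instead of letting it propagate to every downstream participant.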

Crucially, tokenization does not require an all‑or‑nothing leap. It can begin by quietly replacing the most painful reconciliation points and then expand as value becomes clear.

—

How tokenization changes roles and incentives

A shift of this magnitude inevitably affects how different participants operate:

– Lenders and originators gain cleaner pipelines, lower error rates, and more scalable operations. Their incentive is to cut processing costs and compete on speed and reliability.

– Servicers benefit from standardized, continuously updated data, reducing disputes and improving portfolio oversight.

– Investors obtain more transparent access to underlying loan characteristics, supporting better pricing and risk management.

– Regulators see a clearer, more timely picture of systemic exposures and consumer outcomes, with less dependence on lagging reports.

– Borrowers may not see the technology, but they feel its impact through faster decisions, fewer redundant document requests, and potentially lower overall costs.

For tokenization to succeed, governance and incentives must be designed carefully. Shared infrastructure only works if participants trust how rules are set, updated, and enforced. That means robust standards, clear accountability, and alignment between private innovation and public oversight.

—

Addressing common concerns

Skepticism around tokenization is healthy, especially in a market as sensitive as housing. Several concerns frequently arise:

– “Isn’t this just crypto hype?”

Tokenization in mortgage and real estate does not require speculative tokens or unregulated exchanges. It is primarily about data modeling and shared ledgers, implemented within existing legal and regulatory frameworks.

– “What about privacy?”

Properly implemented, tokenization can enhance privacy. Instead of sending full documents to multiple counterparties, participants can be granted selective access to only the fields they need, and for limited periods. Advanced cryptographic techniques, such as zero‑knowledge proofs, can further limit exposure of personally identifiable information, for example by proving that a borrower's income exceeds a threshold without revealing the figure itself.

– “Will this replace institutions?”

No. It reshapes infrastructure, not the need for underwriting expertise, servicing operations, or regulatory oversight. Banks, lenders, and regulators remain central — but they operate on a more reliable and efficient foundation.

– “Is the technology mature enough?”

Underlying components such as permissioned blockchains, digital identity, and standardized data schemas are already in production in other financial sectors. The challenge is less about raw technology and more about coordinated adoption, governance, and standards.
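The selective-disclosure idea raised in the privacy answer can be illustrated with salted hash commitments, a simple technique similar in spirit to the salted digests used by selective-disclosure credential formats such as IETF SD-JWT. This minimal sketch assumes the commitments reach verifiers through a trusted channel (for example, signed by the originating lender or anchored on a ledger); signatures are omitted, and all names and values are hypothetical. It is not a zero-knowledge proof: the verifier learns the disclosed value, just nothing about the fields that stay hidden.

```python
import hashlib
import json
import secrets


def commit(field: str, value, salt: str) -> str:
    """Salted SHA-256 commitment to a single field value."""
    data = json.dumps([salt, field, value], separators=(",", ":"))
    return hashlib.sha256(data.encode("utf-8")).hexdigest()


def issue(record: dict):
    """Salt every field and commit to it. Commitments can be shared or anchored;
    values and salts stay with the data holder."""
    salts = {f: secrets.token_hex(16) for f in record}
    commitments = {f: commit(f, v, salts[f]) for f, v in record.items()}
    return commitments, salts


def disclose(record: dict, salts: dict, fields: list) -> dict:
    """Reveal only the requested fields, each with its salt."""
    return {f: (record[f], salts[f]) for f in fields}


def verify_disclosure(commitments: dict, disclosure: dict) -> bool:
    """Check every revealed (value, salt) pair against the published commitment."""
    return all(f in commitments and commit(f, v, s) == commitments[f]
               for f, (v, s) in disclosure.items())


record = {"borrower_name": "A. Borrower", "national_id": "EXAMPLE-ONLY",
          "borrower_income": 120_000, "employer": "Example Co."}
commitments, salts = issue(record)

proof = disclose(record, salts, ["borrower_income"])  # counterparty sees income only
print(verify_disclosure(commitments, proof))           # True
forged = {"borrower_income": (150_000, salts["borrower_income"])}
print(verify_disclosure(commitments, forged))          # False
```

The random salt matters: without it, a low-entropy value such as an income figure could be recovered by hashing candidate values until one matches the published commitment.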

—

Practical first steps for the industry

For market participants, the path forward does not have to begin with radical experimentation. Several pragmatic steps can lay the groundwork:

1. Invest in data quality

Clean, well‑structured data is the prerequisite for any tokenization effort. Mapping existing fields, resolving inconsistencies, and adopting common schemas are immediate wins, with or without blockchain.

2. Pilot tokenized records in confined environments

Institutions can start by using tokenized representations of loans or property interests for internal reconciliation between departments or with a limited set of counterparties.

3. Engage regulators early

Collaborative pilots and regulatory sandboxes can help ensure that tokenized models respect consumer protections, capital rules, and disclosure requirements from day one.

4. Align with industry standards bodies

Developing shared taxonomies and technical standards will be critical to avoid creating a new generation of incompatible “digital silos.”

5. Focus on clear value cases

Early initiatives should target measurable pain points — for example, reducing repurchase risk through better data lineage, lowering error rates in servicing transfers, or accelerating settlement in specific secondary transactions.

—

Why “doing nothing” is the riskiest option

The existing mortgage and real estate infrastructure has persisted for so long that its flaws can feel inevitable. But as volumes grow, regulations tighten, and macro conditions become more volatile, the cost of relying on brittle, document‑centric systems rises.

– Operational risk increases when critical processes depend on manual workarounds.

– Compliance risk grows as regulators demand real‑time insight instead of quarterly snapshots.

– Strategic risk emerges when new entrants can build more agile, data‑native platforms from scratch.

The choice is not between tokenization and stability. It is between gradually rebuilding the foundation of mortgage and real estate finance, or continuing to layer complexity on top of an outdated core until it becomes unsustainable.

—

A realistic vision for tokenized housing finance

Tokenization will not magically solve affordability challenges, erase credit risk, or eliminate downturns. What it can do is modernize the hidden infrastructure that underpins how housing is financed and how capital moves through the system.

By replacing fragmented records with unified, secure, and programmable data, the industry can:

– Reduce friction and error in origination and servicing.

– Improve transparency for investors and regulators.

– Enable more flexible, targeted capital allocation.

– Lower structural costs that are ultimately borne by borrowers and taxpayers.

In that sense, tokenization is less a speculative bet and more a long‑overdue upgrade to the operating system of mortgage and real estate finance. The asset class is already one of the largest on the planet. It is time for the infrastructure behind it to catch up.

The opinions expressed here reflect the perspective of the author alone and should not be taken as representing the views of any particular publication or institution.